If video data isn’t labeled correctly, machine learning models for computer vision won’t perform reliably. In this thorough guide on video annotation for machine learning, we explain annotation types, discuss challenges and show you step by step, from data preparation to quality control and review, how video training datasets for machine learning are made.

Contents

- What is video annotation?

- How is video annotation used in machine learning?

- The role of video annotation in machine learning

- Types of video annotation for machine learning

- Challenges in video annotation for machine learning

- Step-by-Step guide to video annotation

- Efficient video annotation best practices

- AI and automation in video annotation

- Using video datasets for machine learning

- Enhancing video analysis with deep learning models

- Conclusion

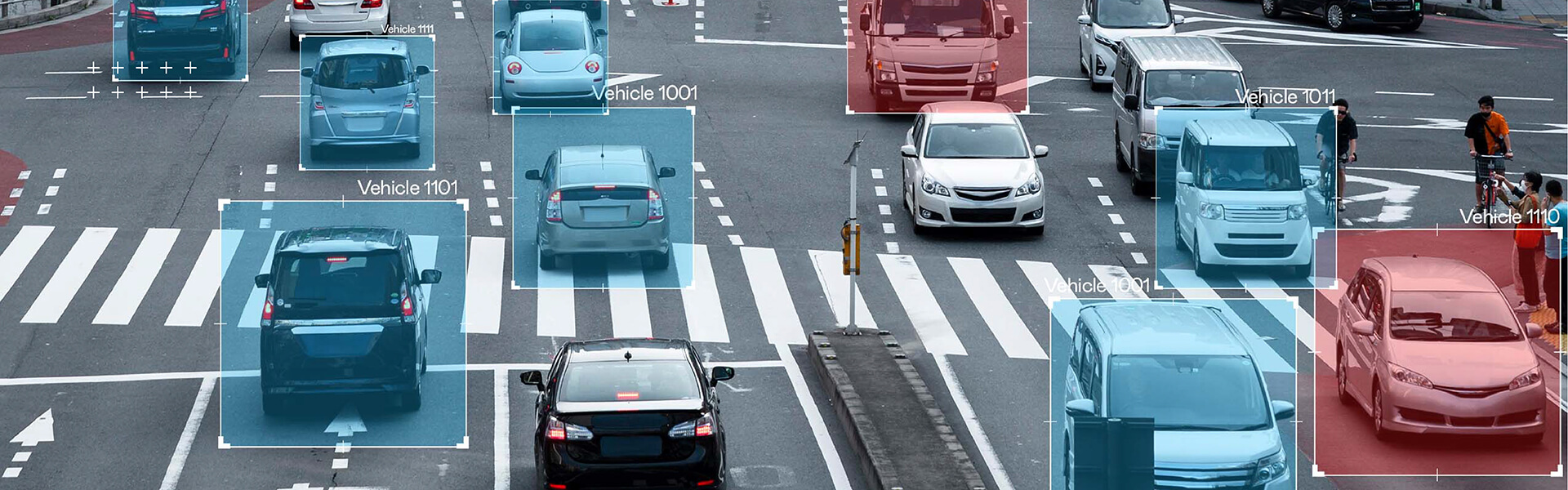

Video annotation for machine learning is the silent architect behind AI systems that understand motion and meaning in dynamic visuals. By tagging video frames with precision, it enables machine learning models to detect objects, track movements, and interpret complex scenarios.

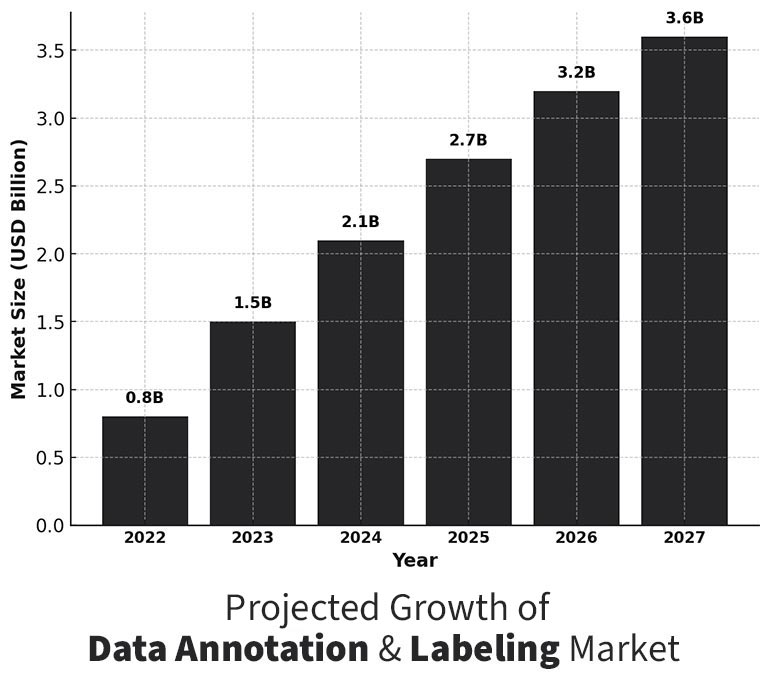

As AI adoption expands across industries like autonomous vehicles, healthcare, and surveillance, the demand for professional video annotation services is at an all-time high. In fact, the global data annotation and labeling market is expected to grow from USD 0.8 billion in 2022 to USD 3.6 billion by 2027, at a CAGR of 33.2%. This rapid growth underscores the essential role of video annotation in enhancing AI accuracy and scalability.

As industries push for more advanced AI-driven solutions, precise and scalable video annotation has become a necessity rather than an option. A 10-minute video at 30 frames per second contains 18,000 frames (10 × 60 × 30), which makes annotation a resource-intensive yet critical step in AI model training. The ability to generate high-quality annotations ensures that machine learning systems can effectively recognize object interactions, predict movements, and analyze real-world environments with greater accuracy.
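The arithmetic above can be spelled out in a few lines. This is a minimal sketch that assumes a constant frame rate and a hypothetical workflow where annotators label every 10th frame by hand and the tool interpolates the frames in between. The interval of 10 is illustrative, not a recommendation.

```python
def total_frames(duration_s: float, fps: float) -> int:
    """Number of frames in a clip of the given length at a constant frame rate."""
    return round(duration_s * fps)


def keyframes_needed(n_frames: int, keyframe_interval: int) -> int:
    """Frames labeled by hand if every `keyframe_interval`-th frame is a keyframe.

    Assumes a hypothetical workflow where frames between keyframes are
    interpolated by the tool, and the last frame is always a keyframe so
    the final segment is closed.
    """
    if keyframe_interval < 1:
        raise ValueError("keyframe_interval must be at least 1")
    if n_frames <= 0:
        return 0
    last = n_frames - 1
    # Keyframes at 0, k, 2k, ... up to the last frame index.
    count = last // keyframe_interval + 1
    # Add the final frame if it does not fall on the keyframe grid.
    if last % keyframe_interval != 0:
        count += 1
    return count


frames = total_frames(10 * 60, 30)
print(frames)                        # 18000
print(keyframes_needed(frames, 10))  # 1801
```

Even in this idealized case, roughly 1,800 frames still need a human before any review pass.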

In this video annotation guide, we explore the core role of video annotation, why it matters, and how it is shaping the future of AI-powered computer vision.

What is video annotation?

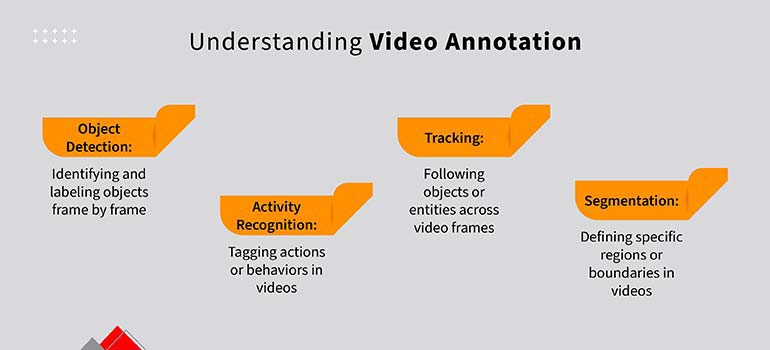

Video annotation is the process of labeling objects, events or scenes within a video to create structured data for machine learning. Its primary purpose is to help AI models understand and analyze dynamic visual information effectively.

How is video annotation used in machine learning?

Annotated videos serve as training datasets for AI models to recognize patterns, track movements, and predict outcomes in real time. This enables AI to classify and monitor objects or actions in practical applications, such as autonomous vehicles and surveillance systems.

The role of video annotation in machine learning

High-quality annotated video data serves as the foundation for developing accurate and reliable AI models. Poorly annotated data can introduce biases, reduce model performance, and lead to errors in critical applications, such as autonomous driving or medical diagnostics.

Quality annotations ensure that AI systems learn to interpret complex visual data accurately, which is essential for achieving robust and scalable solutions. Additionally, consistent and precise annotations help reduce the need for repeated training cycles, saving both time and computational resources. Video annotation has far-reaching applications across multiple sectors, transforming how industries operate in autonomous driving, healthcare, retail, sports and security.

Types of video annotation for machine learning

Video annotation is a critical step in training machine learning models to interpret and analyze video data effectively. It involves labeling various elements in video frames to create datasets that enhance the accuracy of computer vision systems.

Here are the main types of video annotation for machine learning:

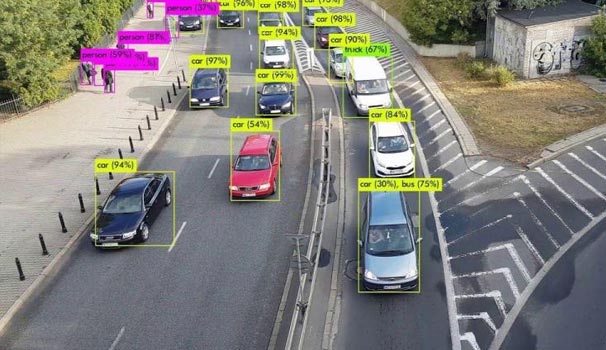

Bounding Boxes

Bounding boxes are one of the most commonly used annotation techniques. This method involves drawing rectangular boxes around objects in a video to identify their location and size. Annotators mark each frame to highlight specific objects, such as cars, pedestrians or animals, enabling machine learning models to detect and classify these entities.

Bounding boxes are widely used in applications like autonomous driving, surveillance systems, and object detection algorithms.
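As a minimal sketch, a single box annotation could be stored as one record per frame. The `BoxAnnotation` class and its field names are hypothetical. It uses the top-left corner plus width and height (the same convention as COCO's `bbox` field) and shows clipping for an object that runs off the edge of a 1920×1080 frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """One bounding box on one video frame.

    Pixel coordinates, with (x, y) at the top-left corner and w, h the
    box width and height.
    """
    frame: int
    label: str
    x: float
    y: float
    w: float
    h: float

    def to_corners(self) -> tuple[float, float, float, float]:
        """Return (x_min, y_min, x_max, y_max)."""
        return (float(self.x), float(self.y),
                float(self.x + self.w), float(self.y + self.h))

    def clipped(self, frame_w: int, frame_h: int) -> "BoxAnnotation":
        """Clip the box to the frame, e.g. for an object partly outside the view."""
        x_min, y_min, x_max, y_max = self.to_corners()
        x_min, x_max = max(0.0, x_min), min(float(frame_w), x_max)
        y_min, y_max = max(0.0, y_min), min(float(frame_h), y_max)
        return BoxAnnotation(self.frame, self.label, x_min, y_min,
                             max(0.0, x_max - x_min), max(0.0, y_max - y_min))


car = BoxAnnotation(frame=42, label="car", x=1850, y=600, w=120, h=80)
print(car.to_corners())           # (1850.0, 600.0, 1970.0, 680.0)
print(car.clipped(1920, 1080).w)  # 70.0
```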

Semantic Segmentation

Semantic segmentation assigns every pixel in a video frame to a particular class, offering a more granular understanding of the scene than bounding boxes. This helps distinguish foreground from background elements or categorize different regions within a video. Semantic segmentation use cases include medical imaging, environmental monitoring, and video editing, where precise classification is essential.
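In practice, a segmentation label for one frame is a mask holding one class ID per pixel. The sketch below assumes masks stored as nested Python lists (real tools usually export PNG masks or run-length encodings) and computes per-class intersection-over-union between an annotator's mask and a reviewer's, which is one simple way to check pixel-level agreement. The class map is hypothetical.

```python
def per_class_iou(mask_a, mask_b, num_classes):
    """Intersection-over-union per class for two masks of integer class IDs.

    Masks are lists of rows with identical shapes; returns None for a class
    that appears in neither mask.
    """
    if len(mask_a) != len(mask_b) or any(
        len(ra) != len(rb) for ra, rb in zip(mask_a, mask_b)
    ):
        raise ValueError("masks must have the same shape")
    inter = [0] * num_classes
    union = [0] * num_classes
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a == b:
                inter[a] += 1
                union[a] += 1
            else:
                # A disagreeing pixel counts toward the union of both classes.
                union[a] += 1
                union[b] += 1
    return [i / u if u else None for i, u in zip(inter, union)]


# 0 = background, 1 = road, 2 = car (a hypothetical class map)
annotator = [[0, 0, 1, 1],
             [0, 2, 2, 1],
             [1, 1, 1, 1]]
reviewer = [[0, 0, 1, 1],
            [0, 2, 1, 1],
            [1, 1, 1, 1]]
print(per_class_iou(annotator, reviewer, 3))  # [1.0, 0.875, 0.5]
```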

Keypoint Annotation

Keypoint annotation focuses on identifying specific points or landmarks on objects or subjects in a video. For instance, keypoints can represent joints in a human body for pose estimation or facial landmarks for emotion detection. This technique is vital in applications like human motion tracking, sports analysis, and augmented reality.
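Keypoints are often exported in the COCO format, which stores each point as an (x, y, v) triple with a visibility flag: 0 for not labeled, 1 for labeled but not visible, and 2 for labeled and visible. The sketch below flattens one frame's keypoints into that layout. The five-point skeleton and the function name are hypothetical (COCO's person skeleton defines 17 points). The "labeled but not visible" flag is what lets an annotator record, say, a wrist hidden behind the body in a video frame.

```python
# A hypothetical 5-point subset; COCO's person skeleton defines 17 keypoints.
KEYPOINT_ORDER = ["nose", "left_shoulder", "right_shoulder",
                  "left_wrist", "right_wrist"]

# COCO visibility flags
NOT_LABELED, LABELED_NOT_VISIBLE, VISIBLE = 0, 1, 2


def to_coco_keypoints(points):
    """Flatten {name: (x, y, v)} into COCO's [x1, y1, v1, x2, y2, v2, ...] list.

    Keypoints missing from `points` are written as (0, 0, NOT_LABELED).
    Also returns the number of labeled keypoints (v > 0), which COCO
    stores as num_keypoints.
    """
    flat = []
    for name in KEYPOINT_ORDER:
        x, y, v = points.get(name, (0, 0, NOT_LABELED))
        flat.extend([x, y, v])
    num_labeled = sum(1 for v in flat[2::3] if v > 0)
    return flat, num_labeled


frame_points = {
    "nose": (640, 210, VISIBLE),
    "left_shoulder": (600, 300, VISIBLE),
    "right_wrist": (720, 420, LABELED_NOT_VISIBLE),  # hidden behind the torso
}
print(to_coco_keypoints(frame_points))
# ([640, 210, 2, 600, 300, 2, 0, 0, 0, 0, 0, 0, 720, 420, 1], 3)
```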

Object Tracking

Object tracking involves annotating and following the movement of objects across multiple video frames. This technique ensures temporal consistency in labeling, enabling the analysis of dynamic behaviors. Object tracking is crucial for use cases like activity recognition, traffic monitoring, and wildlife tracking.
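A simplified sketch of how track IDs can be carried from one frame to the next: each box in the new frame is greedily matched to the previous frame's box it overlaps most, measured by intersection-over-union (IoU). The function names and the 0.3 threshold are illustrative assumptions. Production trackers such as SORT add a motion model (a Kalman filter) and optimal Hungarian matching on top of this idea. Boxes here are (x_min, y_min, x_max, y_max) in pixels.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def assign_track_ids(tracks, detections, next_id, threshold=0.3):
    """Greedily link this frame's boxes to the previous frame's tracks.

    tracks:     {track_id: box} from the previous frame
    detections: list of boxes in the current frame
    Returns (track ids aligned with `detections`, updated next_id).
    """
    # All (IoU, track, detection) candidates, best overlap first.
    candidates = sorted(
        ((iou(box, det), tid, d)
         for tid, box in tracks.items()
         for d, det in enumerate(detections)),
        reverse=True,
    )
    assigned = [None] * len(detections)
    used = set()
    for score, tid, d in candidates:
        if score < threshold:
            break  # remaining candidates overlap even less
        if tid in used or assigned[d] is not None:
            continue
        assigned[d] = tid
        used.add(tid)
    # Unmatched boxes start new tracks.
    for d, tid in enumerate(assigned):
        if tid is None:
            assigned[d] = next_id
            next_id += 1
    return assigned, next_id


frame1 = [(100, 100, 150, 200), (300, 120, 340, 210)]
ids1, next_id = assign_track_ids({}, frame1, next_id=1)  # [1, 2]
frame2 = [(305, 122, 345, 212), (104, 101, 154, 201), (600, 50, 650, 120)]
ids2, next_id = assign_track_ids(dict(zip(ids1, frame1)), frame2, next_id)
print(ids2)  # [2, 1, 3]: both objects keep their IDs, the new one gets ID 3
```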

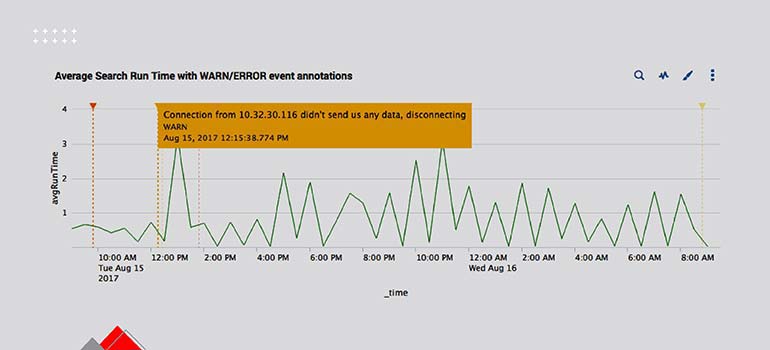

Event Annotation

Event annotation entails marking specific events or actions within a video, such as interactions between objects, gestures or environmental changes. Annotators tag timeframes where these events occur, providing data for tasks like activity recognition, video summarization, and security system enhancements.
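Event labels are usually time ranges rather than per-frame shapes. The sketch below stores an event as an inclusive frame range, converts it to seconds, and measures how well two annotators' ranges for the same event agree using temporal IoU. The `EventSegment` class and the example frame numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventSegment:
    """A labeled event spanning an inclusive range of frame indices."""
    label: str
    start_frame: int
    end_frame: int

    def to_seconds(self, fps: float) -> tuple[float, float]:
        """Start and end time in seconds; the end is where the last frame ends."""
        return self.start_frame / fps, (self.end_frame + 1) / fps


def temporal_iou(a: EventSegment, b: EventSegment) -> float:
    """Overlap of two segments divided by their combined extent, counted in frames."""
    inter = max(0, min(a.end_frame, b.end_frame)
                - max(a.start_frame, b.start_frame) + 1)
    len_a = a.end_frame - a.start_frame + 1
    len_b = b.end_frame - b.start_frame + 1
    return inter / (len_a + len_b - inter)


first = EventSegment("handshake", start_frame=300, end_frame=389)
second = EventSegment("handshake", start_frame=330, end_frame=419)
print(first.to_seconds(fps=30))     # (10.0, 13.0)
print(temporal_iou(first, second))  # 0.5
```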

Each type of video annotation technique serves a unique purpose, addressing diverse needs in machine learning and AI-driven applications.

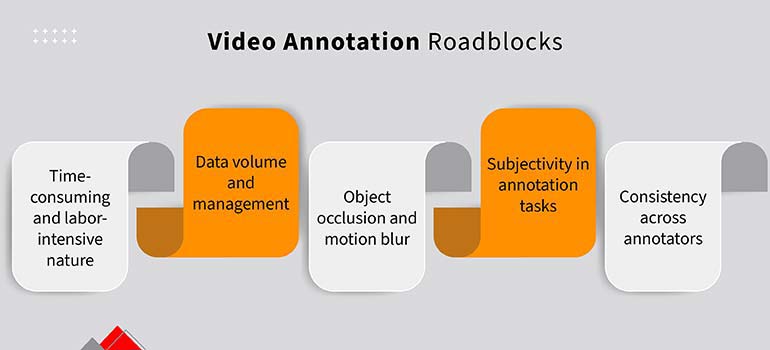

Challenges in video annotation for machine learning

Video annotation has unique challenges that require innovative solutions. Below are the major hurdles faced in this process:

1. Time-consuming and labor-intensive

Labeling thousands of frames is repetitive and demands precision. Despite automation, human oversight creates bottlenecks in large-scale projects.

2. Data volume and management

Managing terabytes of video data requires robust infrastructure, efficient workflows, and seamless tool integration for storage, retrieval and processing.
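To see why storage adds up, here is a rough estimate of decoded frame size. It assumes 1080p, 8-bit RGB frames with no compression, which is roughly what frames occupy once they are extracted from a video for annotation or training. The compressed video file itself is far smaller.

```python
def raw_rgb_bytes(width: int, height: int, n_frames: int,
                  bytes_per_pixel: int = 3) -> int:
    """Size of decoded, uncompressed frames (8-bit RGB by default).

    Ignores codec compression and container overhead, so it describes
    extracted frames held in memory or saved as raw arrays, not the
    original video file.
    """
    return width * height * bytes_per_pixel * n_frames


n_frames = 10 * 60 * 30  # 10 minutes at 30 fps
size = raw_rgb_bytes(1920, 1080, n_frames)
print(size)                    # 111974400000
print(f"{size / 1e9:.0f} GB")  # 112 GB
```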

3. Object occlusion and motion blur

Partially hidden objects and motion blur create labeling ambiguity. For instance, tracking occluded pedestrians in autonomous driving footage requires interpolating their positions across the frames where they are hidden to maintain safety-critical precision.
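Many annotation tools, CVAT among them, fill in boxes between manually labeled keyframes by interpolation. Below is a minimal sketch of linear interpolation for (x, y, w, h) boxes. It assumes roughly constant motion between keyframes, so frames interpolated across a long occlusion still deserve manual review. The function name and example values are hypothetical.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate (x, y, w, h) boxes between two keyframes.

    Returns {frame: box} for every frame from start_frame to end_frame
    inclusive. Assumes roughly constant motion between the keyframes.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must come after start_frame")
    span = end_frame - start_frame
    boxes = {}
    for f in range(start_frame, end_frame + 1):
        t = (f - start_frame) / span  # 0.0 at the first keyframe, 1.0 at the last
        boxes[f] = tuple(s + t * (e - s) for s, e in zip(start_box, end_box))
    return boxes


# Pedestrian labeled before (frame 100) and after (frame 110) passing behind a parked van.
track = interpolate_boxes(100, (200, 300, 40, 90), 110, (250, 300, 40, 90))
print(track[105])  # (225.0, 300.0, 40.0, 90.0)
```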

4. Subjectivity in annotation

Tasks like labeling emotions or gestures are open to subjective interpretation. For example, what counts as “aggressive” behavior varies across cultures. Clear guidelines and reviews minimize subjectivity.

5. Ensuring consistency

Maintaining consistency across annotators is challenging. Labeling discrepancies affect model performance, requiring thorough training, detailed protocols and regular audits.

Transform your video data into actionable insights

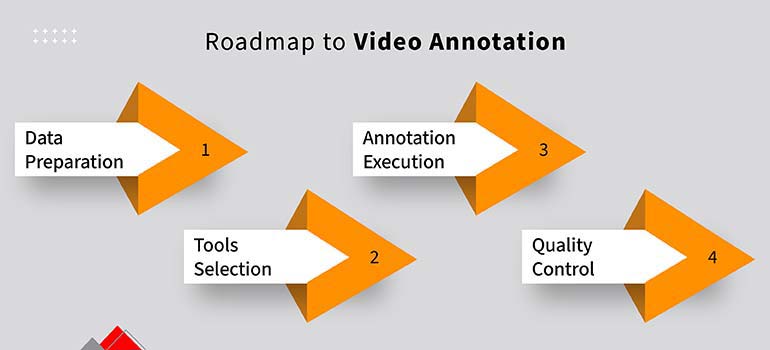

Contact our experts! »Step-by-Step guide to video annotation

Video annotation is a critical step in training machine learning models for tasks such as object detection in video, activity recognition and scene understanding. It involves labeling video data to help AI systems recognize patterns and make accurate predictions.

Here’s a simple 4-step guide to ensure efficient and precise video annotation.

1. Data preparation

- Start by selecting relevant videos that align with your project’s objectives, such as object detection or action recognition.

- Ensure the dataset includes diverse scenarios to cover edge cases.

- Define clear annotation guidelines, detailing class definitions, labeling conventions, and instructions for handling challenging scenarios like occlusion or motion blur.

- A well-prepared dataset and guidelines ensure annotators work consistently and minimize ambiguities.
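Parts of the written guidelines can also be captured in machine-readable form, so tools can check annotations against them. The schema, class names and attribute values below are hypothetical examples, and `schema_errors` is an illustrative helper, not a feature of any particular tool.

```python
# Hypothetical schema mirroring the written guidelines: class -> attribute -> allowed values.
LABEL_SCHEMA = {
    "pedestrian": {"occlusion": {"none", "partial", "heavy"}},
    "car": {"occlusion": {"none", "partial", "heavy"}, "parked": {True, False}},
    "traffic_light": {"state": {"red", "yellow", "green", "unknown"}},
}


def schema_errors(label, attributes):
    """List problems with one annotation's class and attributes; [] means valid."""
    if label not in LABEL_SCHEMA:
        return [f"unknown class {label!r}"]
    allowed = LABEL_SCHEMA[label]
    errors = []
    for name, values in allowed.items():
        if name not in attributes:
            errors.append(f"{label}: missing attribute {name!r}")
        elif attributes[name] not in values:
            errors.append(f"{label}: {name}={attributes[name]!r} is not an allowed value")
    for name in attributes:
        if name not in allowed:
            errors.append(f"{label}: unexpected attribute {name!r}")
    return errors


print(schema_errors("car", {"occlusion": "partial", "parked": False}))  # []
print(schema_errors("pedestrian", {"occlusion": "total"}))
# ["pedestrian: occlusion='total' is not an allowed value"]
```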

2. Tool selection

- Choose a video annotation tool that meets your project needs.

- Popular tools include V7 Labs, Labelbox and SuperAnnotate, offering features like automated tracking and collaboration.

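- Open-source options like CVAT and LabelImg are cost-effective and customizable for smaller projects. Note that LabelImg works on still images only, so video must first be split into frames, whereas CVAT supports video tracks with interpolation.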

- Consider factors like AI-assisted labeling, user-friendliness, and compatibility with data formats to streamline the annotation process.
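Format compatibility often comes down to converting between export layouts. As one example, the sketch below writes box tracks in the comma-separated layout used for MOTChallenge result files (frame, id, bb_left, bb_top, bb_width, bb_height, conf, x, y, z). Treating human-drawn boxes as confidence 1 and writing -1 for the unused 3D coordinates are assumptions made here. The benchmark's ground-truth files use slightly different trailing columns, so check its documentation before relying on this.

```python
import csv
import io


def to_mot_rows(boxes):
    """Convert (frame, track_id, x, y, w, h) tuples into MOTChallenge-style rows.

    Frames are 0-based here and written 1-based, since MOTChallenge numbers
    frames from 1. Confidence is fixed at 1 for human annotations and the
    unused 3D world coordinates are written as -1.
    """
    return [[frame + 1, tid, x, y, w, h, 1, -1, -1, -1]
            for frame, tid, x, y, w, h in sorted(boxes)]


def rows_to_text(rows):
    """Serialize rows as comma-separated lines."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()


boxes = [(1, 7, 104, 101, 50, 100), (0, 7, 100, 100, 50, 100)]
print(rows_to_text(to_mot_rows(boxes)), end="")
# 1,7,100,100,50,100,1,-1,-1,-1
# 2,7,104,101,50,100,1,-1,-1,-1
```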

3. Annotation execution

- Use automated features for repetitive tasks, such as bounding box propagation or keypoint interpolation, and manually refine the results.

- Pay attention to challenging frames with occlusion or motion blur, adhering strictly to annotation guidelines.

- Divide tasks into smaller chunks to maintain focus and prevent annotator fatigue.

- Regularly save progress to safeguard data and use collaborative platforms to enable real-time feedback and issue resolution.
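Splitting a long video into fixed-size jobs is one way to keep tasks manageable. The sketch below produces frame ranges with a small overlap, so objects can be matched across job boundaries. The function name and the sizes are illustrative, though some tools (CVAT, for example) expose similar segment-size and overlap settings when creating a task.

```python
def split_into_chunks(n_frames, chunk_size, overlap=0):
    """Split frames 0..n_frames-1 into inclusive (start, end) ranges.

    Each chunk has at most chunk_size frames, and consecutive chunks share
    `overlap` frames so objects can be matched across chunk boundaries.
    """
    if overlap < 0 or chunk_size <= overlap:
        raise ValueError("need 0 <= overlap < chunk_size")
    chunks = []
    start, step = 0, chunk_size - overlap
    while start < n_frames:
        end = min(start + chunk_size, n_frames) - 1
        chunks.append((start, end))
        if end == n_frames - 1:
            break  # reached the last frame
        start += step
    return chunks


print(split_into_chunks(10, chunk_size=4, overlap=1))  # [(0, 3), (3, 6), (6, 9)]
jobs = split_into_chunks(18000, chunk_size=500, overlap=5)
print(len(jobs), jobs[-1])  # 37 (17820, 17999)
```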

4. Quality control and review

- Implement a multi-step review process, including peer reviews and automated validation for errors or inconsistencies.

- Use spot checks and inter-annotator agreement metrics to ensure adherence to guidelines and to improve team consistency.

- Provide annotators with actionable feedback to refine their work and address recurring issues.

- High-quality annotations reduce downstream errors, saving time and enhancing the reliability of machine learning models.
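For categorical labels such as action classes, Cohen's kappa is one widely used inter-annotator agreement metric for two annotators: it compares observed agreement with the agreement expected by chance. A plain-Python sketch follows (scikit-learn's `sklearn.metrics.cohen_kappa_score` offers a ready-made version). For boxes or masks, IoU-based agreement is commonly used instead.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is the agreement expected by chance from each annotator's label
    frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both used one identical label throughout; kappa is undefined
    return (p_o - p_e) / (1 - p_e)


a = ["walk"] * 6 + ["run"] * 4
b = ["walk"] * 5 + ["run"] * 4 + ["walk"]
print(round(cohens_kappa(a, b), 3))  # 0.583
```

Here the annotators agree on 8 of 10 frames (0.8), but chance alone would produce 0.52 agreement, so kappa is noticeably lower than raw agreement.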

Annotation of live video streams for traffic management and road planning

HabileData delivered live video stream annotation services to improve traffic management and road planning. By accurately annotating vehicles, pedestrians and road elements in real-time footage, we enabled our client to optimize its urban infrastructure. The result: enhanced traffic flow insights, improved safety measures, and data-driven road planning decisions.

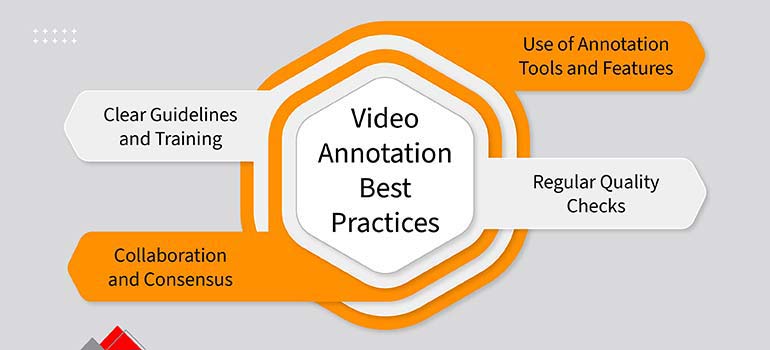

Efficient video annotation best practices

Video annotation isn’t just about labeling data. It’s about building models that see the world as we do. The right tools, the right strategy, and a focus on consistency turn complex frames into actionable insights. This section explores proven practices to make your annotation faster, smarter and sharper.

1. Clear guidelines and training

Clear guidelines and training form the backbone of successful video annotation projects, ensuring annotators perform their tasks accurately and efficiently. From creating comprehensive instructions to offering continuous support, these measures empower teams to tackle challenges, maintain consistency and deliver high-quality annotations that drive reliable machine learning outcomes.

- Develop Comprehensive Annotation Guidelines: Provide annotators with detailed, easy-to-understand guidelines that outline the project objectives, class definitions, labeling standards, and handling of edge cases such as motion blur, object occlusion, or overlapping objects.

- Incorporate Visual Examples: Use annotated examples of correct and incorrect labels to make expectations clear and help annotators better understand complex scenarios.

- Conduct Training Sessions: Organize training programs to familiarize annotators with the tools, workflows and standards. Simulate real-world challenges during training to prepare annotators for potential difficulties.

- Ongoing Support and Updates: Regularly update guidelines as new edge cases or challenges arise and ensure annotators receive timely updates to maintain quality standards.

2. Use of annotation tools and features

The efficient use of annotation tools and features can significantly enhance the accuracy and productivity of video annotation projects. By selecting the right tools, leveraging advanced functionalities, and fostering collaboration through customizable workflows, teams can streamline the process, reduce manual effort, and deliver consistent, high-quality results tailored to project goals.

- Select the Right Tools: Choose annotation tools that align with your project requirements. Popular video labeling tools like V7 Labs, Labelbox, and SuperAnnotate offer features such as AI-assisted labeling and automated tracking for repetitive tasks.

- Leverage Advanced Features: Use automation features like bounding box propagation, keypoint interpolation, and object tracking to save time while ensuring consistent annotations.

- Customizable Workflows: Opt for tools that allow customization, such as defining specific annotation classes or creating shortcuts for common tasks, to enhance productivity.

- Collaborative Features: Use tools that support team collaboration with features like real-time feedback, shared annotation projects, and integrated communication.

3. Collaboration and consensus mechanisms

Collaboration and consensus mechanisms ensure a cohesive approach to video annotation by fostering teamwork, resolving disagreements, measuring consistency and providing feedback, ultimately improving the accuracy and reliability of annotations across the entire project.

- Promote Team Collaboration: Use platforms that enable annotators to share their work, discuss challenges, and seek clarifications in real time.

- Consensus-Based Review: Implement a process where disagreements in annotations are resolved collectively through team discussions or by senior reviewers to ensure uniformity.

- Inter-Annotator Agreement Metrics: Use tools and processes to measure the level of agreement among annotators on complex tasks and refine guidelines to address inconsistencies.

- Feedback Loops: Provide annotators with constructive feedback on their work to encourage continuous improvement and alignment with project goals.
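A consensus rule can be as simple as a qualified majority vote with escalation. In this sketch, which assumes three annotators per item and an illustrative two-thirds threshold, any item without enough agreement is routed to a senior reviewer. The function names and item IDs are hypothetical.

```python
from collections import Counter


def consensus_label(votes, min_agreement=2 / 3):
    """Majority label if its share of votes reaches min_agreement, else None.

    None means the annotators did not agree strongly enough and the item
    should go to a senior reviewer.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= min_agreement else None


def resolve(items):
    """Split {item_id: [labels from each annotator]} into resolved and escalated items."""
    resolved, escalated = {}, []
    for item_id, votes in items.items():
        label = consensus_label(votes)
        if label is None:
            escalated.append(item_id)
        else:
            resolved[item_id] = label
    return resolved, escalated


votes = {
    "frame0120/obj3": ["car", "car", "truck"],
    "frame0120/obj4": ["car", "truck", "bus"],
}
print(resolve(votes))  # ({'frame0120/obj3': 'car'}, ['frame0120/obj4'])
```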

4. Regular quality checks

Regular quality checks are essential to maintaining high standards in video annotation projects. By combining automated validation, peer reviews, and metric tracking, along with an iterative feedback process, teams can identify and address errors, refine workflows, and ensure consistent, accurate annotations that meet project objectives.

- Automated Quality Validation: Use automated tools to detect common errors such as misaligned bounding boxes, incorrect labels, or inconsistencies in annotations across frames.

- Spot Checks and Peer Reviews: Conduct random checks of annotated frames and involve peers to review each other’s work for added accountability and accuracy.

- Track Key Metrics: Monitor annotation accuracy, precision and inter-annotator agreement to identify patterns of error and areas for improvement.

- Iterative Quality Improvement: Establish a review cycle in which feedback from quality checks is used to update guidelines, refine workflows, and retrain annotators, ensuring long-term consistency.
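Several of the automated checks listed above can be sketched in plain Python. The function below flags unknown labels, empty or out-of-frame boxes, and sudden jumps of a tracked box between consecutive frames. The input layout, the function name and the jump heuristic (half the box diagonal) are assumptions chosen for illustration.

```python
def validate(annotations, frame_w, frame_h, allowed_labels, max_jump=0.5):
    """Return a list of (frame, track_id, message) issues found in box annotations.

    annotations: iterable of (frame, track_id, label, x, y, w, h),
                 with (x, y) the top-left corner in pixels.
    max_jump:    largest allowed shift of a track's box centre between
                 consecutive frames, as a fraction of the box diagonal
                 (a hypothetical heuristic; tune it per dataset).
    """
    issues = []
    last_seen = {}  # track_id -> (frame, centre_x, centre_y, diagonal)
    # Process each track in frame order so consecutive frames are adjacent.
    for frame, tid, label, x, y, w, h in sorted(annotations, key=lambda a: (a[1], a[0])):
        if label not in allowed_labels:
            issues.append((frame, tid, f"unknown label {label!r}"))
        if w <= 0 or h <= 0:
            issues.append((frame, tid, "non-positive box size"))
            continue
        if x < 0 or y < 0 or x + w > frame_w or y + h > frame_h:
            issues.append((frame, tid, "box extends outside the frame"))
        cx, cy = x + w / 2, y + h / 2
        diag = (w * w + h * h) ** 0.5
        prev = last_seen.get(tid)
        if prev is not None and prev[0] == frame - 1:
            shift = ((cx - prev[1]) ** 2 + (cy - prev[2]) ** 2) ** 0.5
            if shift > max_jump * prev[3]:
                issues.append((frame, tid, "large jump from previous frame"))
        last_seen[tid] = (frame, cx, cy, diag)
    return issues


annotations = [
    (0, 1, "car", 100, 100, 50, 100),
    (1, 1, "car", 104, 101, 50, 100),
    (2, 1, "car", 400, 101, 50, 100),          # implausible jump
    (0, 2, "pedestrain", 1900, 500, 40, 90),   # typo in label, crosses right edge
]
for issue in validate(annotations, 1920, 1080, {"car", "pedestrian"}):
    print(issue)
# (2, 1, 'large jump from previous frame')
# (0, 2, "unknown label 'pedestrain'")
# (0, 2, 'box extends outside the frame')
```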

By following these detailed best practices, video annotation projects can achieve high-quality outputs that support reliable machine learning model performance while optimizing time and resources.

AI and automation in video annotation

AI-assisted object tracking leverages deep learning and optical flow techniques to maintain object continuity across frames, even in complex, dynamic or partially obscured scenarios. This minimizes repetitive tasks while ensuring precise labeling. Automated quality control further verifies annotations against set standards, as machine learning models detect errors and ensure consistency. This reduces the time spent on manual checks while maintaining high reliability.

Automated video labeling techniques

AI offers several advanced techniques that optimize video labeling for efficiency and precision:

- Transfer Learning: By starting from pre-trained models, transfer learning adapts them to domain-specific video annotation tasks with relatively little additional training data. It builds on existing knowledge, accelerating the process while maintaining accuracy, which makes it well suited to industries like autonomous vehicles and healthcare.

- Active Learning: The model identifies the segments it is least certain about and directs human annotators to focus on these areas. It is then retrained on the newly labeled examples, so its performance improves over time. This ensures time and resources are allocated where they have the most impact.

- Semi-Supervised Learning: Combining a small set of labeled data with a large pool of unlabeled data, semi-supervised learning allows AI to extrapolate patterns and annotate unlabeled segments. This technique scales annotation projects efficiently without compromising quality.
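Both ideas can be illustrated with a model's per-frame class probabilities. The sketch below uses entropy-based uncertainty sampling, one common active-learning strategy, to pick frames for human review, and confidence-thresholded pseudo-labeling, one simple semi-supervised technique, to provisionally label the rest. The probabilities come from a hypothetical model and the 0.9 threshold is arbitrary; pseudo-labels should still be spot-checked.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Active learning by uncertainty sampling: the `budget` most uncertain frames."""
    ranked = sorted(predictions, key=lambda fid: entropy(predictions[fid]),
                    reverse=True)
    return ranked[:budget]


def pseudo_label(predictions, class_names, threshold=0.9):
    """Semi-supervised pseudo-labeling: keep the top class only where confident."""
    labels = {}
    for fid, probs in predictions.items():
        best = max(range(len(probs)), key=lambda i: probs[i])
        if probs[best] >= threshold:
            labels[fid] = class_names[best]
    return labels


classes = ["car", "pedestrian", "cyclist"]
predictions = {  # per-frame class probabilities from a hypothetical model
    "clip1_f0010": [0.97, 0.02, 0.01],
    "clip1_f0020": [0.40, 0.35, 0.25],
    "clip1_f0030": [0.55, 0.44, 0.01],
    "clip2_f0005": [0.05, 0.93, 0.02],
}
print(select_for_annotation(predictions, budget=2))  # ['clip1_f0020', 'clip1_f0030']
print(pseudo_label(predictions, classes))  # {'clip1_f0010': 'car', 'clip2_f0005': 'pedestrian'}
```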

Through these techniques, AI-powered video annotation and labeling drive faster, more cost-effective results, unlocking deeper insights across industries.

AI-powered video annotation supports real-world applications across industries such as autonomous driving, healthcare, retail, sports and security.

Using video datasets for machine learning

High-quality, diverse video datasets are critical for training AI models that perform well across various scenarios. While quality annotations ensure accuracy, diversity allows AI to generalize effectively, enhancing real-world application performance.

Key Datasets for Video Annotation:

- Kinetics: A comprehensive dataset for action recognition with a wide range of human activities.

- UCF101: A popular dataset for video classification featuring 101 action categories.

- MOT (Multiple Object Tracking): Designed for complex object tracking tasks involving multiple dynamic objects.

- Cityscapes: Tailored for autonomous driving, focusing on urban scene segmentation and object recognition.

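When selecting datasets, prioritize relevance, annotation quality, and dataset size. For improved outcomes, split your data into separate training, validation and test sets, keeping all frames from a given video in the same split, since neighboring frames are nearly identical and would otherwise leak into evaluation. Use augmentation techniques like flipping or cropping to enhance diversity. Choosing the right datasets ensures adaptability and accuracy in AI models.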
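Two of these points can be sketched briefly: assigning whole videos (not individual frames) to splits, and keeping labels consistent under augmentation. The helper names are hypothetical, and hashing the video ID is just one way to get a split that stays stable across runs. When a frame is flipped horizontally, its boxes must be mirrored too.

```python
import hashlib


def split_for_video(video_id: str, val_frac: float = 0.1,
                    test_frac: float = 0.1) -> str:
    """Deterministically assign a whole video to 'train', 'val' or 'test'.

    Hashing the video ID (rather than each frame) keeps every frame of a
    video in one split, and gives the same answer on every run.
    """
    digest = hashlib.sha256(video_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + val_frac:
        return "val"
    return "train"


def hflip_box(x, y, w, h, frame_w):
    """Mirror a top-left (x, y, w, h) box to match a horizontally flipped frame."""
    return frame_w - x - w, y, w, h


print(split_for_video("cam03_clip0042"))          # same split on every run
print(hflip_box(100, 50, 40, 80, frame_w=1920))  # (1780, 50, 40, 80)
```

For keypoints, a horizontal flip also swaps left and right joints (a left wrist becomes a right wrist), so flipping is not label-preserving without that extra step.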

Enhancing video analysis with deep learning models

Deep learning has transformed video analysis by enabling tasks such as object detection, action recognition and scene segmentation with remarkable precision. These models capture intricate spatial and temporal patterns across frames, providing deeper insights into video data.

Popular Models for Video Analysis:

- C3D Networks: Use 3D convolutional layers to analyze spatial and temporal features simultaneously, which makes them well suited to video classification tasks.

- Two-Stream Networks: Process RGB frames and optical flow separately to combine spatial and motion information, excelling at action recognition.

- RNNs (Recurrent Neural Networks): Analyze sequential data to recognize patterns over time, such as activity detection or behavior tracking.

- Transformer-Based Models: Utilize attention mechanisms to model long-term dependencies in video frames, making them effective for video captioning and summarization.
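To make the 3D convolution idea concrete, the sketch below tracks how a clip's (frames, height, width) shape changes through a convolution and two pooling layers, applying the standard output-size formula out = floor((in + 2 * padding - kernel) / stride) + 1 to each axis. The layer sizes mirror the original C3D setup (16-frame 112×112 clips, 3×3×3 kernels, and a first pooling layer of 1×2×2 that keeps the time axis intact) and are given for illustration only.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one axis: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def conv3d_out(shape, kernel, stride=(1, 1, 1), padding=(0, 0, 0)):
    """Apply conv_out to each (frames, height, width) axis of a 3D conv or pool."""
    return tuple(conv_out(s, k, st, p)
                 for s, k, st, p in zip(shape, kernel, stride, padding))


clip = (16, 112, 112)  # 16 frames of 112x112 pixels
after_conv1 = conv3d_out(clip, kernel=(3, 3, 3), padding=(1, 1, 1))
after_pool1 = conv3d_out(after_conv1, kernel=(1, 2, 2), stride=(1, 2, 2))
after_pool2 = conv3d_out(after_pool1, kernel=(2, 2, 2), stride=(2, 2, 2))
print(after_conv1)  # (16, 112, 112): padding preserves every axis
print(after_pool1)  # (16, 56, 56): spatial downsampling only
print(after_pool2)  # (8, 28, 28): time is downsampled too
```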

Conclusion

In a world where AI-driven solutions are shaping industries, video annotation is emerging as the backbone for building intelligent, real-time systems. From self-driving cars to precision healthcare and immersive virtual experiences, the accuracy of video data annotations determines the success of these innovations.

By combining the right tools, workflows and human expertise, businesses can harness video annotation trends to not just keep up with the pace of AI advancements but to lead them. As you embark on your video annotation journey, remember that precise annotations today pave the way for smarter, more efficient AI applications tomorrow.


Snehal Joshi heads the business process management vertical at HabileData, the company offering quality data processing services to companies worldwide. He has successfully built, deployed and managed more than 40 data processing management, research and analysis and image intelligence solutions in the last 20 years. Snehal leverages innovation, smart tooling and digitalization across functions and domains to empower organizations to unlock the potential of their business data.